Identifying and Preventing False Information Generated by ChatGPT

A Comprehensive Guide to Identifying and Preventing False Information Generated by ChatGPT for General Consumers

Introduction

Artificial intelligence (AI) has come a long way in recent years, and one of its most notable applications is in natural language processing (NLP). ChatGPT, a state-of-the-art AI language model, has garnered widespread attention for its ability to generate human-like text. However, despite its impressive capabilities, ChatGPT can sometimes generate false information. For general consumers, this misinformation can have significant consequences. In this article, we will discuss strategies for identifying and preventing false information generated by ChatGPT and explore the impact of such misinformation on users.

Understanding ChatGPT's Limitations

While ChatGPT is an advanced language model trained on a large and diverse body of text, it remains a machine learning model with inherent limitations. Its training data contains errors and biases, and the model can reproduce them. It also generates text by predicting plausible wording rather than by looking up verified facts, so it can state something false in a fluent, confident tone. Finally, its knowledge comes from training data collected up to a cutoff date, so it may lack information on recent events, discoveries, or updates on a given topic.

Impact of Misinformation

The dissemination of false information can have various negative effects on general consumers. It can lead to confusion, misinformed decisions, and even harmful actions. For instance, a user might follow erroneous advice given by ChatGPT, which could have financial, legal, or health-related consequences. Furthermore, misinformation can erode trust in AI systems, causing people to lose faith in their ability to provide accurate information.

Strategies to Identify False Information

- Cross-verification: To check the accuracy of information provided by ChatGPT, compare its responses against other reliable sources. Consult reputable websites, news outlets, or academic publications, and treat a claim as confirmed only when independent sources agree with it.

- Logical consistency: Examine the internal consistency of ChatGPT's output. If the generated text contains contradictions or illogical statements, it may be an indication of false information.

- Fact-checking websites: Utilize fact-checking websites to verify the claims made by ChatGPT. These platforms are designed to identify and debunk false information by providing evidence-based assessments.
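
For readers comfortable with a little code, the cross-verification habit described above can be sketched as a simple tally. This is a minimal illustration, not an established tool: the names `SourceCheck` and `cross_verify`, the three verdict labels, and the default threshold of two agreeing sources are all assumptions made for this example. The lookups themselves are still done by hand; the code only records and summarizes them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SourceCheck:
    """One manual lookup of a claim in an independent source (hypothetical type)."""
    source: str     # name of the source consulted, e.g. a news outlet
    supports: bool  # True if that source confirms the claim


def cross_verify(checks: list[SourceCheck], min_agreeing: int = 2) -> str:
    """Summarize manual lookups as 'supported', 'disputed', or 'unverified'.

    The threshold of two agreeing sources is an assumed rule of thumb.
    """
    agreeing = sum(1 for check in checks if check.supports)
    disagreeing = len(checks) - agreeing
    if disagreeing > 0:
        # Any contradicting source means the claim needs a closer look.
        return "disputed"
    if agreeing >= min_agreeing:
        return "supported"
    # Too few sources consulted to draw a conclusion.
    return "unverified"


if __name__ == "__main__":
    lookups = [
        SourceCheck("encyclopedia entry", True),
        SourceCheck("news outlet", True),
    ]
    print(cross_verify(lookups))
```

The sketch deliberately treats a single contradicting source as enough to mark a claim "disputed", since a disagreement is a signal to investigate further rather than to take a vote.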

Preventing False Information

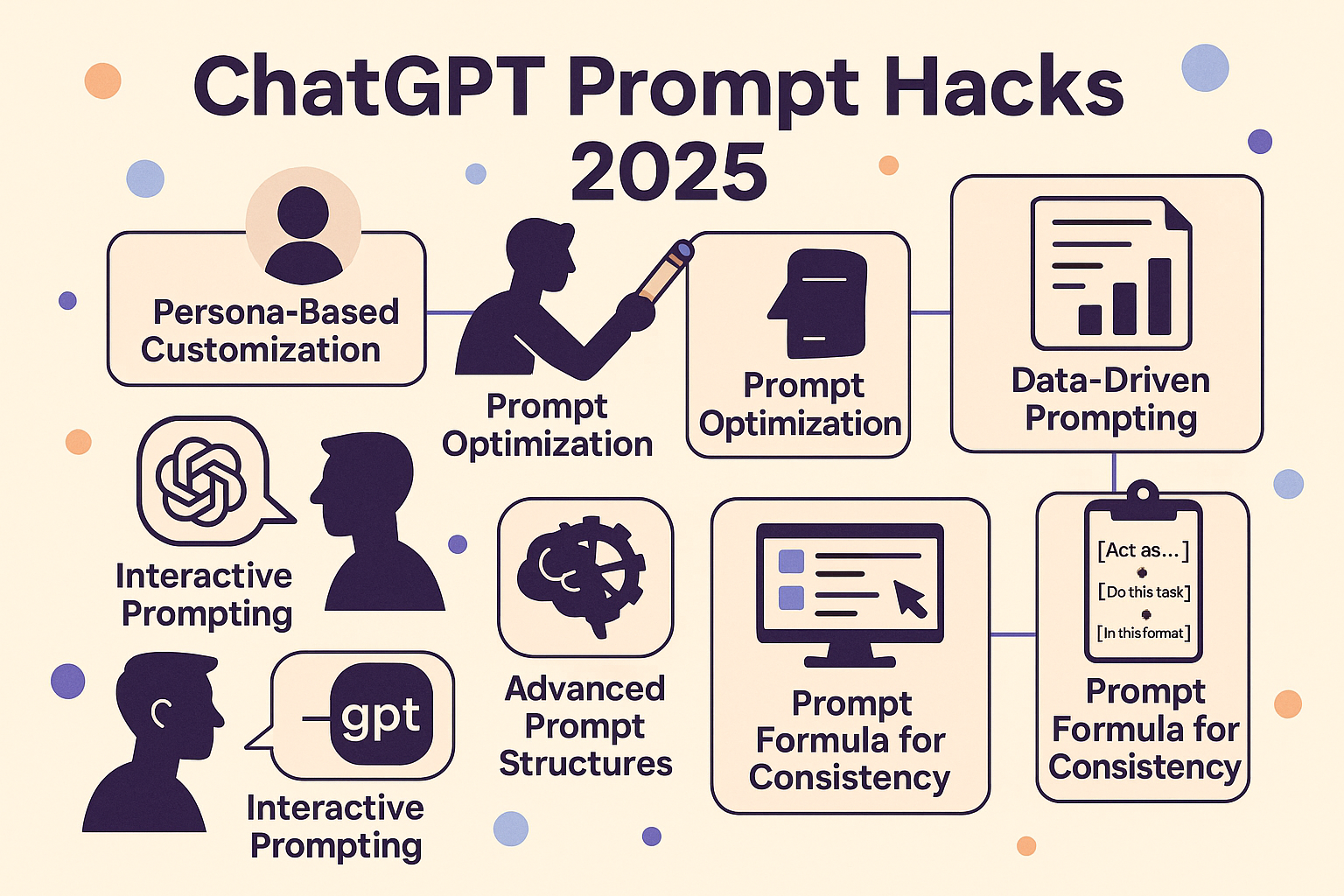

- Limit open-ended questions: When using ChatGPT, try to frame your questions or prompts in a way that encourages specific, factual answers. Open-ended questions can lead to the generation of speculative or false information.

- Stay informed: Keep yourself up-to-date on the latest news, research, and developments in various fields. This will help you discern the accuracy of the information provided by ChatGPT.

- Encourage AI developers to improve models: When you encounter false information generated by a model, report it to the developers. This feedback can help them refine their models and reduce the occurrence of false information in the future.

Conclusion

While ChatGPT is an impressive AI language model with numerous applications, it is essential to be aware of its limitations and the potential for generating false information. By understanding these limitations, employing strategies to identify and prevent misinformation, and staying informed, general consumers can better navigate the world of AI-generated content and make well-informed decisions.